Project Overview

Developing a robust autonomous driving system for automobiles has long been a popular topic in computer vision and artificial intelligence. In this project, our team takes on the task of creating an autonomous driving system for a car racing simulation game, using feature extraction algorithms together with deep learning models. The game UI is shown below:

This is a typical car racing game with simplified settings and controls. Your goal is to drive as far as possible in order to earn a higher score (the score is shown in the top-left corner). You have a time limit of 70 seconds to finish the race. The player can take 5 actions to control the car:

While the car is on the road, it encounters different road conditions, such as other cars blocking the way and curves in the road. Our goal is to develop an autonomous driving system that avoids obstacles, finds the optimal path, and guides the car to the destination as quickly as possible.

Problem Definition

1. Achieve as high a score as possible.

2. Capture and load the frames from the game.

3. Road segmentation.

4. Targets and possible problems in image semantic segmentation:

5. Train a self-driving agent that responds to different road conditions with different actions.

Method and Implementation

1. Use Universe [1] to create a Docker environment for playing the Dusk Drive [2] game, and use VNC to grab the game's video stream.

2. Segment each captured frame. Candidate methods include, but are not limited to, adaptive segmentation, motion energy, and optical flow.

3. Apply a CNN to the segmented images to extract features. Use the difference between the target Q value and the current Q value as the cost function for gradient descent.

4. Apply reinforcement learning to train the Q network, and send the operations that correspond to the Q network's output to the game. Once the game is over, use the score to update the Q network.
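
The cost function in steps 3 and 4 can be illustrated with a small sketch. It assumes the standard one-step Q-learning target, which the list above does not spell out. The function names, the discount factor `gamma`, and the use of plain lists for Q values are hypothetical and chosen only for illustration; this is not our actual implementation.

```python
# Minimal sketch of the Q-learning cost from steps 3 and 4.
# Assumptions (not taken from the project code): one-step targets,
# a discount factor gamma, and Q values stored as one float per action.

def td_target(reward, next_q_values, done, gamma=0.99):
    """Target Q value: r if the episode ended, else r + gamma * max Q(s', a')."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def td_loss(q_values, action, target):
    """Squared difference between the target and the current Q value."""
    diff = target - q_values[action]
    return diff * diff


def greedy_action(q_values):
    """Index of the action with the highest predicted Q value."""
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

In a full agent, the gradient of `td_loss` with respect to the network weights would drive the gradient descent update, and `greedy_action` would pick the operation sent to the game.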

Experiments

We managed to install the Universe library and load the default environment for car racing. We also made a very simple agent that takes one action: UP.
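
A constant-action agent of this kind could look like the sketch below. It follows the usage pattern shown in the Universe README; the helper names `key_action` and `run_up_agent` and the `max_steps` parameter are hypothetical, and the code needs Docker plus the `gym` and `universe` packages to actually start the game.

```python
# Sketch of an agent that always presses UP, modeled on the Universe README.
# gym and universe are imported lazily so key_action works without them.

def key_action(key, pressed=True):
    """Build one VNC keyboard event in the format Universe expects."""
    return [("KeyEvent", key, pressed)]


def run_up_agent(max_steps=1000):
    import gym
    import universe  # noqa: F401  (importing registers the Universe environments)

    env = gym.make("flashgames.DuskDrive-v0")
    env.configure(remotes=1)  # start one local Docker container running the game
    observation_n = env.reset()
    for _ in range(max_steps):
        # One action list per remote; each step holds the UP arrow key.
        action_n = [key_action("ArrowUp") for _ in observation_n]
        observation_n, reward_n, done_n, info = env.step(action_n)
        env.render()
```

Calling `run_up_agent()` starts the game and drives straight ahead, which is enough to confirm that frames and rewards flow back from the environment.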

System Overview

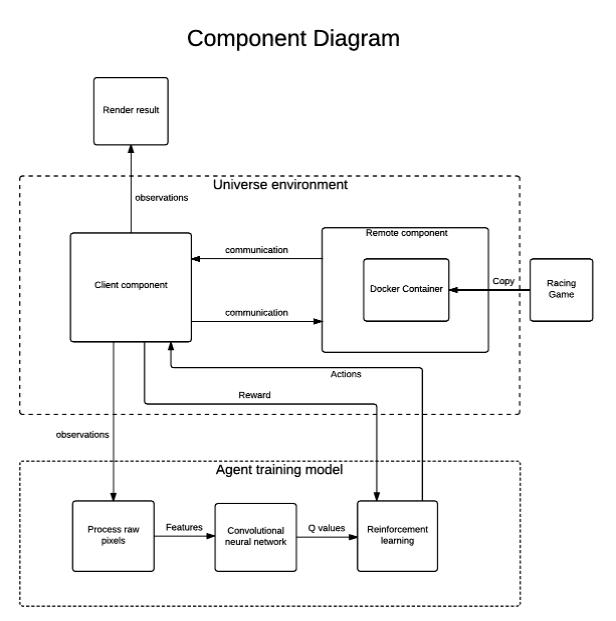

There are two major components in our system: the Universe environment and the agent learning model. We first initialize the Universe environment by creating the remote component with a Docker class object. The Docker class is a container for the game simulation. We then initialize the parameters of the learning model and start the iterative process. The learning process can be described by the following pseudocode:

While episode_n is less than max_episode

Agent takes action(s)

Environment receives the action(s)

Feed the rewards and observations back to learning model

End of while

Save learning model
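
The loop above can be sketched in Python as follows. `ToyEnv` and `RandomAgent` are hypothetical stand-ins for the Universe environment and the learning model, used only so the example runs on its own; the real system would step the game and update a Q network instead.

```python
import random


class ToyEnv:
    """Hypothetical stand-in for the game environment: fixed-length episodes."""

    def __init__(self, episode_length=5):
        self.episode_length = episode_length
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t  # the step counter doubles as the observation

    def step(self, action):
        self.t += 1
        reward = 1.0 if action == "UP" else 0.0
        done = self.t >= self.episode_length
        return self.t, reward, done


class RandomAgent:
    """Hypothetical placeholder for the learning model."""

    def __init__(self, actions, seed=0):
        self.actions = actions
        self.rng = random.Random(seed)
        self.total_reward = 0.0

    def act(self, observation):
        return self.rng.choice(self.actions)

    def learn(self, observation, reward):
        # A real model would update its Q network here; this one only sums rewards.
        self.total_reward += reward


def train(env, agent, max_episode):
    episode_n = 0
    while episode_n < max_episode:
        observation = env.reset()
        done = False
        while not done:
            action = agent.act(observation)               # agent takes an action
            observation, reward, done = env.step(action)  # environment receives it
            agent.learn(observation, reward)              # feed reward and observation back
        episode_n += 1
    return agent  # the caller would save the learning model at this point
```

For example, `train(ToyEnv(), RandomAgent(["UP", "LEFT", "RIGHT"]), max_episode=10)` runs ten short episodes and returns the agent with its accumulated reward.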

We also provide the component diagram for our system.

Credits and References

[1] Universe Library, https://github.com/openai/universe

[2] Dusk Drive, LongAnimals, http://www.kongregate.com/games/longanimals/dusk-drive

[3] Lex Fridman, M.I.T. 6.S094 Introduction to Deep Learning and Self-Driving Cars, http://selfdrivingcars.mit.edu/

[4] Robert Chuchro, Deepak Gupta. Stanford University CS231n project report “Game Playing with Deep Q-Learning using OpenAI Gym”, http://cs231n.stanford.edu/reports/2017/pdfs/616.pdf. Accessed on Nov 5th 2017.